2024

v0 by Nathan

Generative UI. An (old) clone of Vercel's v0.

Introduction

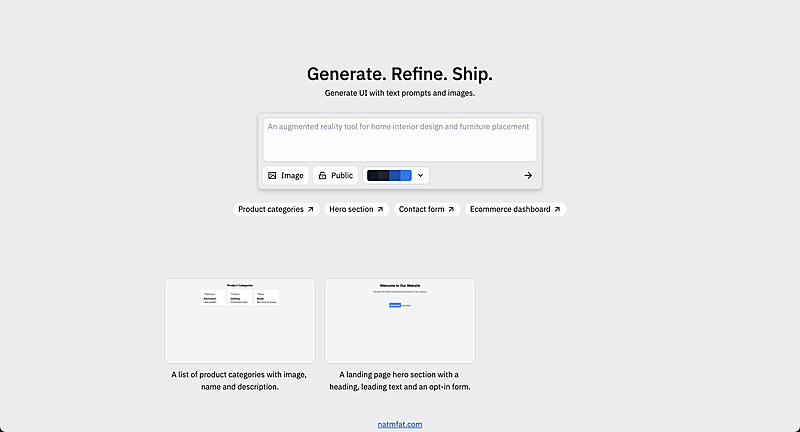

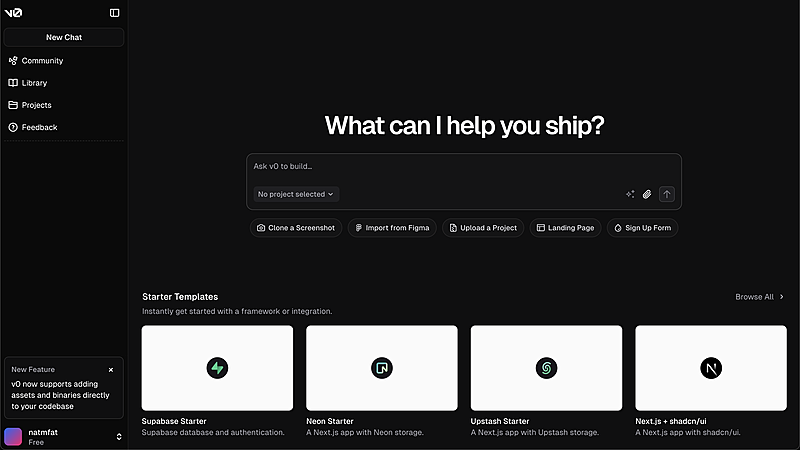

AI is pretty much everywhere. There's hardly a week without some kind of AI news: new models with innovative architectures and cheaper inference costs, pledges for massive datacenter and infrastructure investments, some new domain that we slapped AI on (sure, we've seen AI in healthcare, but have you seen AI in a smartwatch for healthcare?), or simply significant improvements in existing domains like image generation.

With these advancements come a slew of "slop" products. For the most part, these are just complicated wrappers over existing AI models that make them easier and more accessible to use. For example, while there isn't a practical difference between using ChatGPT directly and using an AI IDE like Cursor, the built-in integration and tooling dramatically alter user experience and expectations. Especially in software engineering, entire new cultures and definitions have developed specifically as a response to this AI (over)saturation: vibe coding and a new breed of founder, to name a few.

Unfortunately, a large number of models (and AI apps) are closed-source, cost a lot of clams, or are simply infeasible to run anywhere except a cloud hosting provider due to their massive size. Not to mention the fairly self-evident dangers of AI built and run by a select few billion-dollar tech giant monopolies. However, thanks to the work of Ollama and research teams that release their work, it is easy to find decent models that are not only open source but small enough to run on consumer hardware.

These emerging technologies and applications have been a massive inspiration and motivation for this project. How can I contribute to this ecosystem of AI apps, using the tools that Ollama provides? How can we improve the experience of developers working locally, on their own machines? Ultimately, I decided to recreate v0 by Vercel. I thought the design was stunning, and it would be a great way to test my new libraries, which I'll describe in further detail later.

Features

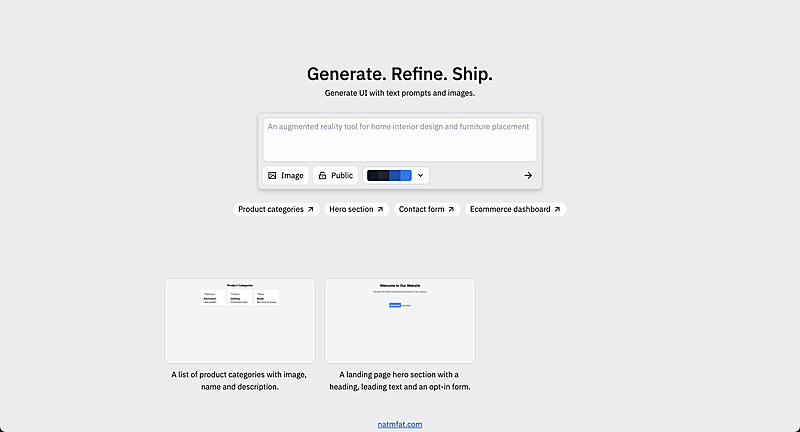

- A similarly styled input field

- A way to view what Ollama spits back out at us

Technical Stack

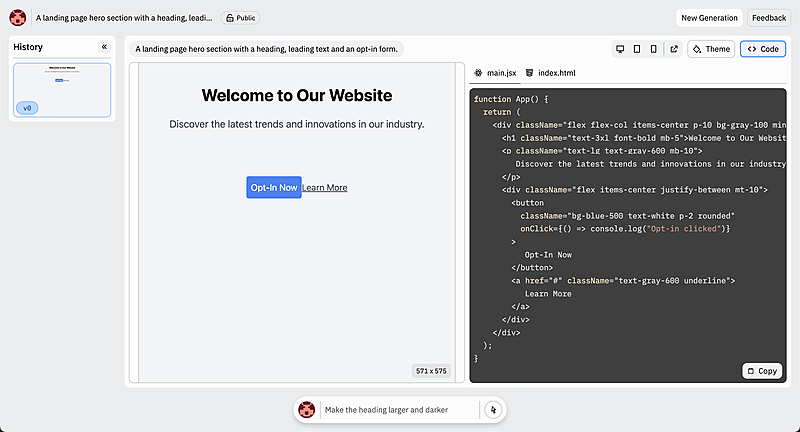

I used the libraries and frameworks I was most familiar with to help speed up the development process: Remix, React, TypeScript, and of course Ollama to provide the necessary models and API service. However, v0 was so fun (and so jank) to make because it uses so many of the tools and packages I developed personally. Instead of plain Remix actions, I used my own remix-endpoint for typesafe forms and mutations. Instead of Prisma, I used shitgen for my database. Instead of shadcn/ui (or whatever Vercel is using), I used my own design system. Honestly, using my own packages in common scenarios throughout my own projects has exposed loads of bugs that I wouldn't have otherwise found. For example, shitgen didn't have any support for enums, was improperly generating types, and failed to create certain queries involving limits and ordering. Not many people end up using my work because I have so little traction online, so it's important to me that I test my own work (with actual tests or in hands-on projects) to ensure its stability.

Challenges Faced

Finding a small model that fit my requirements was surprisingly difficult. I needed something:

- small and fast enough to run on consumer hardware (my base MacBook Air)

- smarter than rocks just to follow what the prompt demands

- able to output only code, no text

I settled on qwen2.5-coder:1.5b, even though it's pretty bad at making user interfaces compared to ChatGPT. It's fast and kind of understands my prompts. It knows basic React and Tailwind, which was great for rendering a preview inside of an iframe.
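To make the "code only" requirement and the iframe preview more concrete, here is a rough sketch of how the pieces could fit together. It is not the project's actual code. The system prompt, the helper names (`extractCode`, `generateComponent`, `buildPreviewDocument`), the assumption that the model emits a component called `App`, and the CDN-based HTML shell are all hypothetical. The only parts taken from real APIs are Ollama's local `/api/generate` endpoint (with its `model`, `system`, `prompt`, and `stream` fields) and the public CDN URLs.

```typescript
// Sketch only: prompt text, helper names, and the HTML shell are assumptions,
// not the project's actual code.
const OLLAMA_URL = "http://localhost:11434/api/generate"; // Ollama's default local address
const MODEL = "qwen2.5-coder:1.5b";
const FENCE = "`".repeat(3); // markdown code fence, built indirectly for readability

// Hypothetical system prompt nudging the model toward code-only output.
const SYSTEM_PROMPT =
  "Write one React component named App, styled with Tailwind classes. " +
  "Reply with code only: no explanations and no markdown.";

/** Small models often wrap code in markdown fences anyway; keep only the code. */
export function extractCode(raw: string): string {
  const fenced = new RegExp(FENCE + "[\\w-]*\\n([\\s\\S]*?)" + FENCE).exec(raw);
  return (fenced?.[1] ?? raw).trim();
}

/** Ask the local Ollama server for a completion (non-streaming for simplicity). */
export async function generateComponent(prompt: string): Promise<string> {
  const res = await fetch(OLLAMA_URL, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ model: MODEL, system: SYSTEM_PROMPT, prompt, stream: false }),
  });
  if (!res.ok) throw new Error(`Ollama request failed with status ${res.status}`);
  const data = (await res.json()) as { response: string };
  return extractCode(data.response);
}

/** Wrap generated code in a standalone HTML page suitable for an iframe's srcdoc. */
export function buildPreviewDocument(code: string): string {
  // Prevent generated code from closing the script tag early.
  const safe = code.replace(/<\/script/gi, "<\\/script");
  return [
    "<!doctype html>",
    "<html><head>",
    '<script src="https://cdn.tailwindcss.com"></script>',
    '<script src="https://unpkg.com/react@18/umd/react.development.js"></script>',
    '<script src="https://unpkg.com/react-dom@18/umd/react-dom.development.js"></script>',
    '<script src="https://unpkg.com/@babel/standalone/babel.min.js"></script>',
    '</head><body><div id="root"></div>',
    '<script type="text/babel">',
    safe,
    // Assumes the generated code defines a component called App.
    'ReactDOM.createRoot(document.getElementById("root")).render(<App />);',
    "</script></body></html>",
  ].join("\n");
}
```

In a React view, the result of `buildPreviewDocument` could be passed to an `<iframe srcDoc={...} sandbox="allow-scripts" />`, which keeps whatever the model generates isolated from the host page. Stripping fences after the fact is a pragmatic fallback: a 1.5b model will not reliably obey a "no markdown" instruction, so cleaning the output tends to be more dependable than prompting alone.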